A GitHub project to open VS Code in parallel with the Delphi IDE, to take advantage of Copilot.

Impressive! Not…

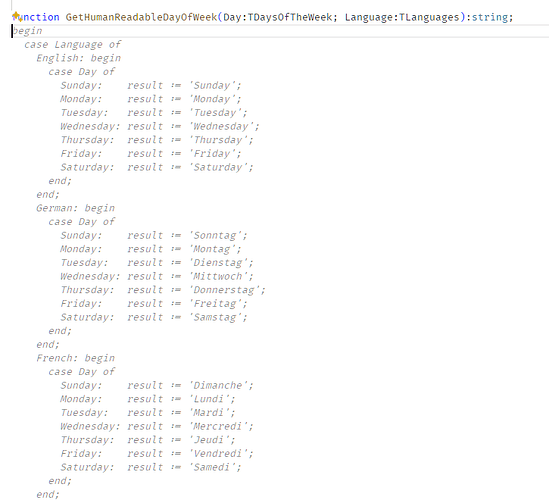

Well, I didn’t think of it when I copied that over from the GitHub … but the code has the assignment operators all aligned, so I’m gunna have to give it points.

It’s a good example of how these assistants do exactly what you (probably) told them to, without doing what a decent human developer would do: think about the best solution. Because this is probably not how you would do localization in your application.

And even if you would, these nested case statements over an enum make my brain hurt. This shows that Copilot knows nothing about the full range of Delphi language features.

IMHO (feel free to disagree) at the current state of “AI” (yes, I put this in quotes) this will just lead to worse code than we had with “copied from StackOverflow” in the past.

To me, AI at the moment is like having an inexperienced junior developer hanging around and giving it their best shot. Sometimes they come up with something which has flashes of inspiration and you’re both impressed and a little bit surprised - but a lot of the time, in fact more often than not, it’s not that great.

There’s a long way to go - but at least right now there is some progress. Right now for anyone with sufficient coding skills the AI can be helpful… or make things worse and take longer - just like farming out a job to a junior coder.

The issue is with AI being integrated directly into tools of all sorts, because there is no longer a clear distinction between something generated by a deterministic algorithm and something an LLM hallucinated.

And this inevitably leads to situations worse than letting juniors commit source code to prod without a proper review.

Sure. I don’t think the point here is “How to perform localisation”.

It was just demonstrating a facility to have the computer type what “you” were gunna type anyway.

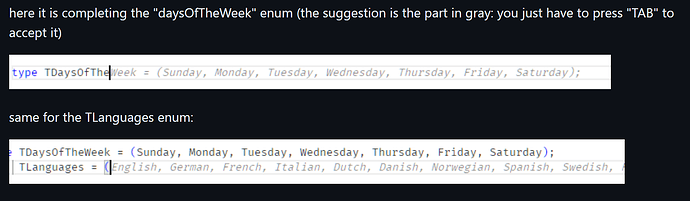

The above is in the context of it having already done this :

I think asking an LLM for a strategy to do internationalisation is a different thing.

I haven’t used it, I don’t know what it would generally produce for Delphi code … but it’s something for us to know about.

AI in this case is really just fancy predictive text, which you can either accept with a tab or just type over the top. Visual Studio/ReSharper has this live. If it gets it right 1 out of 5 times it’s a help, because I can choose to ignore it (so no harm done). It will only ever improve marginally, no matter how much effort they put into it, because second-guessing what a developer wants to do, when often the developer is still thinking about what they want to do, is only going to get you so far. I like it, but it’s hardly earth shattering (it saves me some typing).

> because second-guessing what a developer wants to do, when often the developer is still thinking about what they want to do

I suppose the true measure of AI would be if, once you have thought about what you want to do, you find it already entered for you.

Perhaps we finally have the lead in to the ad where Jack Thompson says “Now we can all get some sleep!”

Richard Feldman talked about Copilot somewhere in this discussion: “How Programming has Changed with Conor Hoekstra” (Software Unscripted podcast, on Spotify).

He said having an eager-beaver AI trying to pop up programming ideas was distracting and broke his flow.

But when he was observing / mentoring someone else, he was in ‘code review mode’ all the time, so the suggestions weren’t breaking his concentration.

FYI: after researching and trying some AI coding assistants, I’m working on a RAD Studio plugin for Codeium which will be published on GitHub. It is currently a POC to check editor features - basic plugin behaviour is working, and it can auto insert, highlight and remove mock completion code. I need to polish that a bit and work on the Codeium integration. Progress will be slow but I’m committed to getting it working.

Codeium has a few advantages over Copilot. I won’t go into all the details (available in the marketing on their website) but the completions are much better than Copilot and it’s free. I tried contacting Codeium to get some insight, let them know about the plugin, etc. to no avail.

As for the merits of AI coding assistants, I think of it more like the current live templates but on steroids. Instead of having some pre-defined template inserted in your code (in some limited scenarios) and having to fill in the blanks (like a for-in loop variable and list names), the assistant will see that you just retrieved a list of things and when you type “for” will suggest code to iterate over them. There are much more complex completions and, like Misha, I think that if it is often helpful then it is useful. You will likely start to learn when it is helpful and when it is not, and just keep typing.

Aside from code completion, Codeium, Copilot and other assistants also have other features, like code generation (like how we originally were asking ChatGPT to create code with prompts, except these tools are more context aware) and code analysis (explain/simplify this code, is this secure, write a unit test, etc).

The usefulness of these tools will only improve as they take in more context from your code (some can do multi-repos), are better at learning your coding style, are trained on larger and more up to date code bases, get faster (Codeium has lower latency than most other tools), can present multiple alternatives to generated code, can be tuned to your preferences, etc.

(Putting aside any security concerns in sharing your programming content …)

I have been wondering about how the AI-in-my-browser works with the content transfer limits that the LLMs quite necessarily have.

When you ask ChatGPT or Gemini a question in a browser, there’s only so much data flowing across.

If you have a medium-sized application … how much data is transferred back and forth to the LLM, how much repetition is involved, and how can it be optimised to the most efficient process while still giving useful results ?

Remember Microsoft Clippy?

AI assistants, done badly, will be this; history repeating itself. The reasons people hated Clippy were manifold, a lot to do with its over-eager conversational style, but also because it acted like a nosey person looking over your shoulder “heyyyyy. whatch doin?”

It also was fairly limited in the exact help it would or wouldn’t give.

If you fall out of love with an AI ‘assistant’ which has a personality then it’s doomed, no matter how useful it might be. Cortana… no, Alexa… gets shouted at a lot…

If AI is going to be in the IDE it needs to earn its place and work with coders to make their day better - and you never make a coder’s day better by interrupting his or her flow with nonsense.

Ironically, Clippy is also the name of a well-respected Rust IDE linter/helper … but not AI-related.

In the browser you are limited to the allowed, and periodically increasing, token count of the LLM. You can provide a good chunk of code for context but after that, in regular use, the LLM has to rely on its training. Some LLMs are starting to get memory across sessions, and many promise not to use your code in their general training.
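To make the token limit a little more concrete, here is a minimal Python sketch of packing code snippets into a fixed token budget before they are sent as context. Everything in it is an assumption for illustration: the function names are hypothetical, and the "about 4 characters per token" estimate is only a crude rule of thumb, not any real model's tokenizer.

```python
def estimate_tokens(text: str) -> int:
    """Very rough token estimate.

    Assumes about 4 characters per token (an illustrative guess,
    not a real tokenizer).
    """
    return max(1, len(text) // 4)


def pack_context(snippets: list[str], budget: int) -> list[str]:
    """Keep snippets, in order, until the estimated token budget is used up."""
    chosen = []
    used = 0
    for snippet in snippets:
        cost = estimate_tokens(snippet)
        if used + cost > budget:
            break  # anything that does not fit is simply not sent
        chosen.append(snippet)
        used += cost
    return chosen
```

Whatever falls outside the budget never reaches the model, which is why it then has to fall back on its training.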

The next step up is to use AI tools that can scan all of your code (or other content) and index it in a vector database. This can then be used to retrieve portions of relevant code to use as the context. This is built into Copilot, Codeium and some similar tools, or you can combine open source tools to achieve the same result. The extent of the indexing varies from one tool to another.
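As a rough sketch of the index-then-retrieve idea, the Python below builds a tiny in-memory index of code chunks and pulls back the most relevant one for a query. It is not how Copilot or Codeium do it internally: the bag-of-words "embedding" stands in for a learned embedding model, a plain list stands in for a vector database, and all function names and sample chunks are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (real tools use learned embeddings)."""
    return Counter(re.findall(r"[a-z_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(chunks: list[str]) -> list[tuple[str, Counter]]:
    """Pair every chunk with its vector; a plain list stands in for the vector database."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index: list[tuple[str, Counter]], query: str, k: int = 2) -> list[str]:
    """Return the k chunks whose vectors are most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


# Hypothetical code chunks, as if scanned from a project.
chunks = [
    "function LoadCustomers: TList<TCustomer>;",
    "procedure SaveInvoice(const Invoice: TInvoice);",
    "function FormatCurrency(Value: Currency): string;",
]
index = build_index(chunks)
top = retrieve(index, "save the invoice to disk", k=1)
```

Only the retrieved chunks would be placed in the prompt, so the amount of data sent stays small however large the codebase is.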

Codeium has a paid offering to train on your codebase and, to address the security concerns, can even run in a private instance if you really don’t want any chance of your code leaving your organisation.